Improved Semantic Segmentation and Depth Estimation

We use Diffusion-TTA to adapt semantic segmentors of SegFormer and depth predictors of DenseDepth. We use a latent diffusion model which is pre-trained on ADE20K and NYU Depth v2 dataset for the respective task. The diffusion model concatenates the segmentation/depth map with the noisy image during conditioning. We test adaptation performance on different distribution drifts.

Input

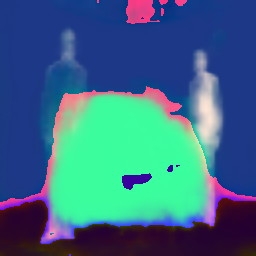

Before TTA

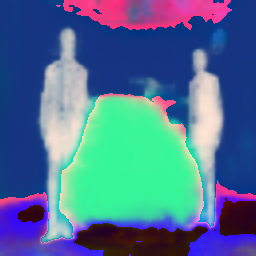

After TTA

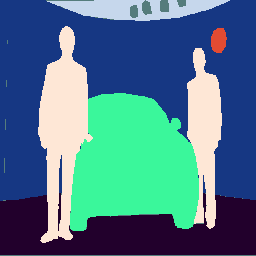

Ground-truth

| Task: Model | TTA | Clean | Gaussian Noise | Fog | Frost | Snow | Contrast | Shot | ||

|---|---|---|---|---|---|---|---|---|---|---|

| Segmentation: Segformer | ✗ | 66.1 | 65.3 | 63.0 | 58.0 | 55.2 | 65.3 | 63.3 | ||

| ✓ | 66.1 (0.0) | 66.4 (+1.1) | 65.1 (+2.1) | 58.9 (+0.9) | 56.6 (+1.4) | 66.4 (+1.1) | 63.7 (+0.4) | |||

| Depth: DenseDepth | ✗ | 92.4 | 79.1 | 81.6 | 72.2 | 72.7 | 77.4 | 81.3 | ||

| ✓ | 92.6 (+0.3) | 82.1 (+3.0) | 84.4 (+2.8) | 73.0 (+0.8) | 74.1 (+1.4) | 77.4 (0.0) | 82.1 (+0.8) | |||